Stereo shading reprojection

Stereo shading reprojection is a technique developed by Oculus to optimize virtual reality (VR) rendering. It reprojects pixels rendered for one eye into the other eye’s view and fills the remaining gaps with an additional rendering pass. [1]

According to Oculus, the technique worked well in a Unity sample for scenes that are pixel shader heavy, reducing GPU rendering time by up to 20%. [1][2]

First-generation Oculus virtual reality apps render the scene twice, once for each eye. Since the two images normally look similar, Oculus researchers looked for a way to share some pixel rendering work between both eyes. Stereo shading reprojection makes this possible. [1]

Stereo shading reprojection is easy to add to Unity-based projects and could also be integrated with Unreal or other engines. The technique follows Asynchronous Spacewarp, a rendering technology that Oculus introduced in 2016 and that allowed the company to lower the minimum specifications for the Oculus Rift. [1][2]

Theory

Virtual reality has high computational requirements and places a heavy load on graphics cards; most PC VR applications require a high-end graphics card. Increased efficiency is therefore desirable: it lowers the minimum requirements for VR applications, making virtual reality available to more people. [2][3]

The idea behind Oculus stereo shading reprojection is simple: it uses the similarities between the perspective views of the two eyes to reduce redundant rendering work. Normally, VR apps render the image twice, once for each eye. With stereo shading reprojection, pixels are “rendered once, then reprojected to the other eye, to share the rendering cost over both eyes.” [2][3]

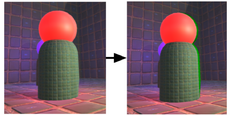

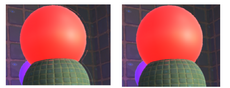

Since the eyes are separated by a short distance, they see the environment from slightly different perspectives. The two views nevertheless share much of their content, and stereo shading reprojection takes advantage of this to avoid redundant rendering work for parts of the scene that have already been shaded for one eye. According to Oculus engineers, stereo shading reprojection uses “information from the depth buffer to reproject the first eye’s rendering result into the second eye’s framebuffer.” Because the eye perspectives differ, some reprojected pixels do not correspond to what the second eye actually sees. The technique therefore has to identify which pixels are valid so that the invalid ones can be masked out, avoiding artifacts and ghosting effects (Figure 1 and Figure 2). [1][2][3][4]
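The role of depth in the reprojection can be illustrated with a small sketch. It is not taken from the Oculus implementation: it assumes an idealized pair of parallel pinhole cameras, and the function name and the parameters `focal_px` and `eye_separation` are hypothetical. Under that assumption, a surface point at a given depth shifts horizontally between the two eye images by a disparity that is inversely proportional to its depth.

```python
def reproject_x_to_right_eye(x_left, depth, focal_px, eye_separation):
    """Map a left-eye pixel column to the right eye using its depth.

    Assumes two parallel pinhole cameras separated horizontally by
    `eye_separation`, a focal length of `focal_px` pixels, and `depth`
    measured along the view axis in the same units as the separation.
    """
    if depth <= 0:
        raise ValueError("depth must be positive")
    disparity = focal_px * eye_separation / depth  # horizontal shift in pixels
    return x_left - disparity


# Near surfaces shift more between the eyes than distant ones.
near = reproject_x_to_right_eye(500.0, depth=0.5, focal_px=600.0, eye_separation=0.064)
far = reproject_x_to_right_eye(500.0, depth=20.0, focal_px=600.0, eye_separation=0.064)
print(f"near: {near:.1f}, far: {far:.1f}")  # prints "near: 423.2, far: 498.1"
```

Real headsets typically use asymmetric projection matrices per eye, so an actual implementation would reproject through the full view and projection transforms rather than a single disparity term.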

While the idea behind stereo shading reprojection is relatively simple, Oculus lists several requirements that an implementation must meet to provide a comfortable VR experience:

- It is still stereoscopically correct, i.e. the sense of depth should be identical to normal rendering;

- It can recognize pixels not visible in the first eye but visible in the second eye due to slightly different points of view;

- It has a backup solution for specular surfaces that don’t work well under reprojection;

- It does not have obvious visual artifacts. [1]

Oculus also offers some suggestions to make the technique practical:

- [It should] be easy to integrate into your project;

- Fit into the traditional rendering engine well, not interfering with other rendering like transparency passes or post effects;

- Be easy to turn on and off dynamically, so you don’t have to use it when it isn’t a win. [1]

Oculus outlines the following basic procedure for stereo shading reprojection in a typical forward renderer:

- Render left eye: save out the depth and color buffer before the transparency pass;

- Render right eye: save out the right eye depth (after the right eye depth only pass);

- Reprojection pass: using the depth values, reproject the left eye color to the right eye’s position, and generate a pixel culling mask;

- Continue the right eye opaque and additive lighting passes, fill pixels in those areas which aren’t covered by the pixel culling mask;

- Reset the pixel culling mask and finish subsequent passes on the right eye. [1][2]
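The reprojection and masking steps above can be sketched on the CPU for a single scanline. This is an illustrative simplification, not the Oculus shader code. It assumes parallel pinhole cameras and a gather-style lookup from each right-eye pixel into the left-eye buffers, and the function name and the `depth_tolerance` parameter are hypothetical.

```python
def reproject_scanline(left_color, left_depth, right_depth,
                       focal_px, eye_separation, depth_tolerance=0.01):
    """Reuse left-eye colors for one right-eye scanline where that is valid.

    Returns (right_color, needs_shading). right_color holds reused left-eye
    colors, with None where nothing could be reused; needs_shading marks the
    pixels the right eye must still shade itself.
    """
    width = len(right_depth)
    right_color = [None] * width
    needs_shading = [True] * width
    for x_right in range(width):
        z = right_depth[x_right]
        if z <= 0:
            continue  # no valid depth, leave the pixel for normal shading
        # Column where this surface point would appear in the left eye.
        x_left = round(x_right + focal_px * eye_separation / z)
        if not 0 <= x_left < width:
            continue  # the point lies outside the left eye's image
        # A depth mismatch means the left eye saw a different surface there.
        if abs(left_depth[x_left] - z) > depth_tolerance * z:
            continue
        right_color[x_right] = left_color[x_left]
        needs_shading[x_right] = False
    return right_color, needs_shading


# A foreground object (depth 5) in front of a background (depth 10).
left_color = ["b0", "b1", "b2", "b3", "F0", "F1", "b6", "b7"]
left_depth = [10, 10, 10, 10, 5, 5, 10, 10]
right_depth = [10, 10, 5, 5, 10, 10, 10, 10]
color, mask = reproject_scanline(left_color, left_depth, right_depth,
                                 focal_px=10, eye_separation=1.0)
print(color)  # ['b1', 'b2', 'F0', 'F1', None, 'b6', 'b7', None]
print(mask)   # [False, False, False, False, True, False, False, True]
```

In this toy scene only two of the eight right-eye pixels still need shading: one background pixel that the foreground object hid from the left eye, and one pixel whose source falls outside the left eye's image.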

Oculus has noted that enabling stereo shading reprojection saved around 20% of rendering time, and that the technique is most useful for scenes that are heavy in pixel shading. [2]

Limitations

Oculus has also listed some limitations of stereo shading reprojection:

- The initial implementation is specific to Unity, but it shouldn’t be hard to integrate with Unreal and other engines;

- This is a pure pixel shading GPU optimization. If the shaders are very simple (only one texture fetch), it is likely this optimization won’t help, as the reprojection overhead can be 0.4 ms to 1.0 ms on a GTX 970 level GPU;

- For mobile VR, depth buffer referencing and resolving are slow due to mobile GPU architecture, which will add considerable overhead on top, so we don’t encourage trying the idea on mobile hardware;

- The optimization requires both eye cameras to be rendered sequentially, so it is not compatible with optimizations that issue one draw call for both eyes (for example, Unity’s Single-Pass stereo rendering or Multi-View in OpenGL);

- For reprojected pixels, this process only shades it from one eye’s point of view, which is not correct for highly view-dependent effects like water or mirror materials. It also won’t work for materials using fake depth information like parallax occlusion mapping; for those cases, we provided a mechanism to turn off reprojection;

- Using the depth rejection approach, as we did for our Unity implementation, the depth buffer will need to be restored after the opaque lighting pass. This can potentially hurt the transparency pass’s depth culling efficiency. [1]
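The trade-off between the fixed reprojection overhead and the shading work saved can be estimated with simple arithmetic. The sketch below is a back-of-the-envelope model, not a formula published by Oculus. It assumes that the saving equals the fraction of right-eye pixels reused multiplied by the right eye's opaque shading time, and all function and parameter names are hypothetical.

```python
def reprojection_net_saving_ms(right_eye_shading_ms, reused_fraction, overhead_ms):
    """Estimate GPU time saved per frame, in milliseconds.

    A negative result means the reprojection pass costs more than it saves.
    """
    if not 0.0 <= reused_fraction <= 1.0:
        raise ValueError("reused_fraction must be between 0 and 1")
    return right_eye_shading_ms * reused_fraction - overhead_ms


def should_enable_reprojection(right_eye_shading_ms, reused_fraction, overhead_ms):
    """Return True only when the estimated net saving is positive."""
    return reprojection_net_saving_ms(
        right_eye_shading_ms, reused_fraction, overhead_ms) > 0.0


# Heavy shaders: the saving outweighs a 1.0 ms overhead.
print(should_enable_reprojection(4.0, reused_fraction=0.9, overhead_ms=1.0))  # True
# Very simple shaders: even a 0.4 ms overhead is not recovered.
print(should_enable_reprojection(0.3, reused_fraction=0.9, overhead_ms=0.4))  # False
```

A check of this kind, fed with measured timings, is one way an application could decide when to toggle the technique at runtime.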

References

- [1] Zang, J. and Green, S. (2017). Introducing stereo shading reprojection for Unity. Retrieved from https://developer.oculus.com/blog/introducing-stereo-shading-reprojection-for-unity/

- [2] Lang, B. (2017). Oculus’ new ‘stereo shading reprojection’ brings big performance gains to certain VR scenes. Retrieved from https://www.roadtovr.com/oculus-new-stereo-shading-reprojection-brings-big-performance-gains-certain-vr-scenes/

- [3] Hills-Duty, R. (2017). Oculus introduce new rendering technology for performance gains. Retrieved from https://www.vrfocus.com/2017/08/oculus-introduce-new-rendering-technology-for-performance-gains/

- [4] Arguinbaev, M. (2017). Oculus introduces stereo shading reprojection to the Unity engine. Retrieved from https://thevrbase.com/oculus-introduces-stereo-shading-reprojection-to-the-unity-engine/