Redirected walking

Redirected walking is a possible solution to the mismatch between the limited physical space in which a user can be tracked and the larger distances they may want to cover in the virtual world. This approach takes advantage of “people’s inability to detect small discrepancies between visual and proprioceptive sensory information during navigation”,[1] and it lets the user turn and walk through the virtual environment (VE) with their body instead of a joystick, while reducing the physical space needed relative to the virtual space explored.[2]

According to Steinicke and others (2009), when humans can use only vision to judge their motion through a virtual scene, they can successfully estimate their momentary direction of self-motion but are less accurate at perceiving their path of travel. By introducing the right mismatches between the user's physical movement and its visual consequence in the VE, the user can be steered towards the center of the tracking space, away from the edges of the room [1]. People do not notice when the virtual distance they have to walk is increased or decreased, or when the virtual room is shifted so that they perceive their path as straight when the real path is in fact curved. Similarly, when users turn their heads, a virtual rotation up to 49 percent larger or 20 percent smaller than the physical one also goes unnoticed. As long as a movement is seen and sensed, its magnitude does not have to be precise [3]. It is these limitations of human perception in sensing position, orientation and movement that redirected walking algorithms exploit [2].
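As a rough illustration of the head-turn figures quoted above, the sketch below expresses a rotation manipulation as a gain (the ratio of virtual to physical rotation) and checks whether it falls within the range the paragraph gives, i.e. a virtual turn from 20 percent smaller to 49 percent larger than the physical one (gains from 0.80 to 1.49). Reading the percentages as a virtual-to-physical ratio, and the function names, are assumptions made for illustration; the threshold studies define and measure their gains in more detail.

```python
# Assumed bounds derived from the percentages quoted in the text:
# a virtual turn up to 20 percent smaller or 49 percent larger than the
# physical turn is taken to go unnoticed.
LOWER_ROTATION_GAIN = 0.80
UPPER_ROTATION_GAIN = 1.49


def rotation_gain(virtual_deg: float, physical_deg: float) -> float:
    """Ratio of the rotation shown in the VE to the rotation performed physically."""
    if physical_deg == 0:
        raise ValueError("physical rotation must be non-zero")
    return virtual_deg / physical_deg


def is_probably_unnoticed(virtual_deg: float, physical_deg: float) -> bool:
    """True if the gain lies inside the assumed imperceptible range."""
    gain = rotation_gain(virtual_deg, physical_deg)
    return LOWER_ROTATION_GAIN <= gain <= UPPER_ROTATION_GAIN


if __name__ == "__main__":
    # A 90-degree physical turn shown as a 120-degree virtual turn (gain ~1.33).
    print(is_probably_unnoticed(120.0, 90.0))  # True
    # The same physical turn shown as a 150-degree virtual turn (gain ~1.67).
    print(is_probably_unnoticed(150.0, 90.0))  # False
```

Because the check works on a ratio, it applies equally to left and right turns, as long as the virtual and physical rotations share the same sign.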

Traditionally, the problem with exploring VEs has been that, in many existing VR systems, the user navigates the virtual world with hand-based control peripherals that set the direction, speed, acceleration and deceleration of movement, which reduces the sense of immersion. Other devices, such as treadmills, let users walk through VEs, but even these do not give a strong sense of immersion, since the user still has to change direction manually. Various prototypes have been developed to improve walking as a way of exploring virtual spaces, such as omni-directional treadmills, motion footpads, robot tiles and motion carpets. Although these systems are technological achievements, they are costly and hardly scalable (they support only one walking user), and so they are not good candidates for advancement beyond the prototype stage [1][4][5].

How the user should move around in VR so as to maximize immersion has still not been solved in a fully satisfactory manner [3]. Real walking is more presence-enhancing than the other techniques described above and is therefore a promising solution [6]. Presence can be defined as the subjective feeling of being in the virtual environment, and it is important for VE applications because it further engages the user in a credible virtual place [2][6]. By tracking the user's position and orientation within a certain area, immersive virtual environments that use head-mounted displays (HMDs) allow users to navigate the virtual world in a more natural manner: the position and orientation of the person are constantly updated, and the view in the HMD is adjusted accordingly. However, it has been difficult to develop compelling large-scale VEs because of the limitations of tracking technology (e.g. range) and because users usually have access only to relatively small physical spaces in which to walk [1][7]. This creates a need for a system that lets the user walk over large distances in the virtual world while physically remaining within a relatively small space [4]. For example, first-person video games in virtual reality would benefit from such technology: gamers would experience the game more immersively not only because their field of view is that of the virtual character, but also because their movements would be tracked in-game, allowing them to cover long distances in virtual reality while staying in a small physical area [6].

How redirected walking is achieved

The basic technique for redirected walking is to rotate the virtual scene around a vertical axis centered on the user's head. Because the scene is rotated, a user who wants to walk in a straight line in the VE has to keep turning physically to reach the goal. By inserting small rotations over time, the user is induced to return towards the center of the physical area without realizing it. In this way, redirected walking increases the amount of virtual space that can be simulated and traversed while the user is confined to a small physical area, and it also prevents users from colliding with the walls of the room [1][2]. For the technique to be successful, the rotation of the visual scene must also not increase the users' simulator sickness [2].
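The core operation described above can be sketched in two dimensions, on the floor plane, since the rotation axis is vertical. Each frame, a small extra yaw is applied and every virtual point is rotated about the user's head position. The per-frame angle here comes from a hypothetical injection rate `max_rate_deg_per_s`; its default value, like the function names, is an illustrative assumption rather than a figure from the cited studies. A real system would pick the sign of the rotation with a steering algorithm, track a moving head, and rotate the full 3D scene.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]  # (x, z) position on the floor plane, in metres


def rotate_about_head(point: Point, head: Point, angle_rad: float) -> Point:
    """Rotate a scene point about the vertical axis through the user's head."""
    dx, dz = point[0] - head[0], point[1] - head[1]
    cos_a, sin_a = math.cos(angle_rad), math.sin(angle_rad)
    return (head[0] + dx * cos_a - dz * sin_a,
            head[1] + dx * sin_a + dz * cos_a)


def redirect_frame(scene: List[Point], head: Point, dt: float,
                   max_rate_deg_per_s: float = 1.0, direction: int = 1) -> List[Point]:
    """Apply one frame of small scene rotation about the head.

    max_rate_deg_per_s is a hypothetical injection rate chosen for illustration;
    direction (+1 or -1) would come from a steering algorithm.
    """
    angle = math.radians(max_rate_deg_per_s) * dt * direction
    return [rotate_about_head(p, head, angle) for p in scene]


if __name__ == "__main__":
    head = (0.0, 0.0)
    scene = [(0.0, 10.0)]  # a virtual goal 10 m straight ahead
    for _ in range(90):     # 90 frames at 90 Hz, i.e. one second
        scene = redirect_frame(scene, head, dt=1 / 90)
    # The goal has now rotated about 1 degree around the head, so the user
    # has to turn slightly to keep walking towards it.
    print(scene[0])
```

Because the rotation is centered on the head, the distance from the user to every virtual object is preserved; only the direction changes, which is what the user unknowingly compensates for by turning.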

Achieving redirected walking, with its correspondence between real and virtual movements that makes the body act as a control peripheral, provides users with rich spatial-sensory feedback, resulting in a greater sense of presence and less chance of becoming disoriented in the VE. Indeed, according to Hodgson and Bachmann (2013), “virtual walking produces the same proprioceptive, inertial, and somatosensory cues that users experience while navigating in the real world” [7].

The biological basis for redirected walking can be seen in the phenomenon of people who get lost in the woods, for example, and walk in circles without realizing it, even when trying to walk in a straight line [1]. Souman et al. (2009) demonstrated this phenomenon: participants walked in circles when they could not see the sun, and even when the sun was visible (providing some sense of orientation) they sometimes veered from a straight course, although they did not walk in circles in that case [8].

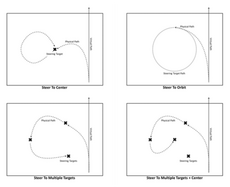

Determining the space required and the maximum rates of imperceptible steering for effective redirected walking depends not only on the limits of human perception but also on other relevant factors that may receive less attention. These include the specific attention demands of the user's task in the VE, perceptual adaptation (which depends on the duration of sessions and the number of repeated sessions), the nature of the VE (in relation to the proximity of objects and the amount of optic flow), individual differences between users, and the redirected walking algorithms used. These algorithms are responsible for the imperceptible rotation of the virtual scene and the scaling of movements that guide users away from the boundaries of the tracking area, allowing them to explore large virtual worlds while walking naturally in a limited physical space. Hodgson and Bachmann (2013) tested four algorithms: Steer-to-Center, Steer-to-Orbit, Steer-to-Multiple-Targets, and Steer-to-Multiple+Center (Figure 1). They concluded that Steer-to-Center tended to outperform the other algorithms at keeping users within the smallest possible area [7].
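The steering algorithms differ mainly in where they aim the user. The following simplified sketch covers two of them: Steer-to-Center always aims at the center of the tracking area, while Steer-to-Multiple-Targets picks, from several targets, the one that needs the smallest change of heading. That selection rule, the coordinates and the function names are assumptions for illustration; the implementations compared by Hodgson and Bachmann (2013) also scale and limit the injected rotations, which is omitted here. Each function returns the signed angle the user would need to be turned through, which a redirection controller would then inject gradually.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]  # (x, y) on the floor of the tracking area, in metres


def wrap_angle(a: float) -> float:
    """Wrap an angle to the interval [-pi, pi)."""
    return (a + math.pi) % (2 * math.pi) - math.pi


def bearing_error(user: Point, heading: float, target: Point) -> float:
    """Signed angle (radians) from the user's heading to the direction of target."""
    to_target = math.atan2(target[1] - user[1], target[0] - user[0])
    return wrap_angle(to_target - heading)


def steer_to_center(user: Point, heading: float, center: Point = (0.0, 0.0)) -> float:
    """Steer-to-Center: always aim the user at the center of the tracking area."""
    return bearing_error(user, heading, center)


def steer_to_multiple_targets(user: Point, heading: float,
                              targets: Sequence[Point]) -> float:
    """Steer-to-Multiple-Targets (simplified, assumed rule): aim at the target
    that requires the smallest change of heading."""
    return min((bearing_error(user, heading, t) for t in targets), key=abs)


if __name__ == "__main__":
    # User near the east edge (+x), walking north (+y, heading pi/2).
    user, heading = (3.0, 0.0), math.pi / 2
    targets = [(3.0, 3.0), (-3.0, 3.0), (-3.0, -3.0), (3.0, -3.0)]
    print(math.degrees(steer_to_center(user, heading)))
    print(math.degrees(steer_to_multiple_targets(user, heading, targets)))
```

In this example Steer-to-Center asks for a left turn of about 90 degrees towards the middle of the room, whereas the multiple-targets variant finds a target almost straight ahead and asks for almost no rotation, illustrating why the two strategies keep users in differently sized areas.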

Limitations of redirected walking

For redirected walking to become a widely used tool in VR, it needs to be unnoticeable and must not distract users. It also must not increase the incidence of simulator sickness or interfere with spatial learning and memory. Studies that have examined how much redirection can be applied to a scene without being noticed suggest that, within specific thresholds, simulator sickness does not become more frequent. A more relevant limitation is the minimum physical space required for effective redirected walking: Hodgson et al. (2011) suggest that a tracking area with a diameter ranging from at least 30 m to more than 44 m is needed to simulate infinitely large VEs [1]. This impedes a quick transition of the technology into the living room. Not only is the available space more limited there, but the space also needs to be empty (for example, free of furniture). In addition, the technology is still expensive for the average consumer, since it requires accurate position tracking over a large area. If the technology involved in redirected walking keeps developing, it might become a consumer reality, as VR headsets have [3].
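To relate the quoted diameters to a steering rate, assume (as a simplification not stated in the source) that a user who believes they are walking straight is actually led along a circle that just fits inside the tracking area. Walking a distance s along a circle of radius r changes heading by s/r radians, so the scene must be rotated by 180/(πr) degrees for every metre walked. The helper name is hypothetical.

```python
import math


def rotation_per_metre_deg(tracking_diameter_m: float) -> float:
    """Heading change (degrees per metre walked) needed to keep a user on a
    circle whose diameter equals the tracking-area diameter."""
    radius = tracking_diameter_m / 2.0
    return math.degrees(1.0 / radius)


if __name__ == "__main__":
    for diameter in (30.0, 44.0):
        rate = rotation_per_metre_deg(diameter)
        print(f"{diameter:.0f} m diameter -> {rate:.2f} deg/m")
    # 30 m diameter -> 3.82 deg/m
    # 44 m diameter -> 2.60 deg/m
```

The relationship runs in one direction: the gentler (less perceptible) the injected rotation per metre must be, the larger the radius of the physical path, and therefore the larger the empty tracking area required.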

References

- [1] Hodgson, E., Bachmann, E. and Waller, D. (2011). Redirected Walking to Explore Virtual Environments: Assessing the Potential for Spatial Interference. ACM Transactions on Applied Perception, 8(4).

- [2] Razzaque, S., Swapp, D., Slater, M., Whitton, M. C. and Steed, A. (2002). Redirected Walking in Place. EGVE '02 Proceedings of the Workshop on Virtual Environments, pages 123-130.

- [3] Cite error: no text was provided for this reference.

- [4] Steinicke, F., Bruder, G., Ropinski, T. and Hinrichs, K. (2008). Moving Towards Generally Applicable Redirected Walking. Proceedings of the Virtual Reality International Conference (VRIC), pages 15-24.

- [5] Steinicke, F., Bruder, G., Jerald, J., Frenz, H. and Lappe, M. (2010). Estimation of Detection Thresholds for Redirected Walking Techniques. IEEE Trans Vis Comput Graph., 16(1): 17-27.

- [6] Steinicke, F., Bruder, G., Hinrichs, K. and Steed, A. (2009). Presence-Enhancing Real Walking User Interface for First-Person Video Games. Proceedings of the 2009 ACM SIGGRAPH Symposium on Video Games, pages 111-118.

- [7] Hodgson, E. and Bachmann, E. (2013). Comparing Four Approaches to Generalized Redirected Walking: Simulation and Live User Data. IEEE Trans Vis Comput Graph., 19(4): 634-643.

- [8] Souman, J. L., Frissen, I., Sreenivasa, M. N. and Ernst, M. O. (2009). Walking Straight into Circles. Current Biology, 19: 1538-1542.