Redirected touching

Redirected touching is a technique in which virtual space is warped so that several virtual objects map to one real object, which serves as a passive haptic prop. The motion of the virtual hand therefore differs from that of the real hand, and these discrepancies compensate for the differences between the real and virtual objects. Like redirected walking, the technique exploits errors in human perception to introduce discrepancies between the virtual environment (VE) and the real one. It is useful only as long as the user does not notice the altered mapping.[1][2]

Virtual environments and haptic feedback

A problem with virtual environments is that virtual objects cannot be felt. This lack of haptic feedback makes interaction with the VE feel unnatural and may reduce the sense of presence [3]. Passive haptics (physical props) provide compelling touch feedback for virtual objects and are commonly used in VEs. The virtual objects are mapped to the real props, generally one-to-one [1][2]. Haptic feedback significantly enhances a VE user’s experience, making it more compelling because the user touches a real object [3]. However, passive haptic displays are inflexible: changing an object in the VE requires changing the associated real object [4]. Evidence suggests that passive haptics are generally preferred over no touch feedback, and that they also improve performance on precision tasks and spatial knowledge.

Although objects in the VE and the real world have traditionally been mapped one-to-one (see figure 1), this mapping is not required. With redirected touching, a single physical object can provide haptic feedback for several virtual objects in the VE (figure 2) by warping the virtual space [1][3]. This introduces a discrepancy between the real and virtual hand motions. For example, a flat physical table could provide haptic feedback for a sloped virtual table by showing the virtual hand moving along a slope while the user’s hand moves on the flat table [1]. The technique exploits the dominance of vision over the other senses so that a single real object can provide haptic feedback for many differently shaped virtual objects [1][2][5][3].
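The flat-table example can be illustrated with a minimal sketch. It assumes a simple vertical shear along one axis, which is not the warping method of the cited system; the function name `warp_to_sloped_table` and its parameters are hypothetical.

```python
import math

def warp_to_sloped_table(real_pos, slope_deg):
    """Map a real fingertip position to a virtual one with a vertical shear.

    real_pos: (x, y, z), with z = 0 on the flat physical table top.
    slope_deg: incline of the virtual table along the x axis (hypothetical parameter).
    """
    x, y, z = real_pos
    # Raise the virtual hand in proportion to x, so the plane z = 0 maps onto the slope.
    return (x, y, z + x * math.tan(math.radians(slope_deg)))

# A finger resting on the flat table 0.5 units along x is drawn on a 10-degree slope.
virtual = warp_to_sloped_table((0.5, 0.2, 0.0), 10.0)
```

Under this assumption, the real finger touches the flat table at exactly the moment the virtual finger touches the sloped surface, which is the property the technique relies on.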

Visual dominance

As with redirected walking, redirected touching takes advantage of errors in human perception, in this case involving touch and vision. According to Kohli et al. (2013), “vision usually dominates proprioception when the two conflict; people tend to believe their hand is where they see it, rather than where they feel it.” A person who wears distorting glasses while moving a hand along a straight surface will feel the surface as curved. In another example, subjects who held an object through a cloth while viewing the same object through a distorting lens believed that the object they held was more similar to the shape they saw than to the shape they felt. In another study, subjects used their thumb to push a piston mounted on a passive isometric control peripheral while they were shown a virtual spring that compressed as they applied force to the real piston. Although the real piston did not physically move, their perception of the spring stiffness was influenced by viewing the virtual spring [3]. Finally, it has been shown that the perceived elasticity of a physical elastic deformable surface can be influenced by altering the amount of deformation that the user’s hand makes on a virtual object [3].

Visual dominance is generally not complete. When exposed to real haptic and virtual cube-shaped objects with discrepant edge curvatures, users perceive the curvature of the object as intermediate between the two. Human sensory signals are weighted by their reliability: more reliable signals are given more weight [1][5]. Redirected touching leverages visual dominance so that discrepancies between real and virtual objects go unnoticed. As described above, the process is analogous to redirected walking, where a discrepancy is introduced between the user’s head rotation and the virtual head rotation, allowing large VEs to be explored within a smaller, limited physical space [5].
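Reliability weighting can be sketched as follows. The sketch assumes the common inverse-variance weighting model, which the sources cited here do not spell out; the function name and the numbers are hypothetical.

```python
def combine_cues(visual, visual_var, haptic, haptic_var):
    """Reliability-weighted average of a visual and a haptic estimate."""
    # Assumption: a cue's reliability is the inverse of its variance.
    r_v = 1.0 / visual_var
    r_h = 1.0 / haptic_var
    w_v = r_v / (r_v + r_h)
    return w_v * visual + (1.0 - w_v) * haptic

# Hypothetical curvature estimates: vision reports 2.0, touch reports 4.0,
# and the haptic estimate has three times the variance of the visual one.
perceived = combine_cues(2.0, 1.0, 4.0, 3.0)
```

With these made-up values, the combined estimate falls between the two cues and closer to the visual one, matching the intermediate percept described above.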

Achieving redirected touching

Kohli (2010) describes an implementation of redirected touching that uses low-cost, quick-to-set-up passive haptics to provide haptic feedback to a user experiencing a VE. As explained above, the virtual space around an object is warped, exploiting the user’s visual dominance. The user’s virtual hand moves in directions that differ from the physical motion, so that the real and virtual hands reach their respective objects simultaneously. The system currently focuses on finger-based interactions with VEs [6].

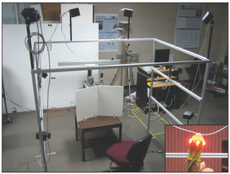

The setup used in Kohli (2010) requires head tracking (3rdTech HiBall-3000 tracking system), finger tracking (PhaseSpace IMPULSE motion tracking system), and a system to communicate with the trackers (Virtual Reality Peripheral Network). The virtual environment is rendered using the Gamebryo game engine running on a dual quad-core 2.3GHz Intel Xeon machine with 8GB of RAM and an Nvidia GeForce GTX 280 GPU. The VE is presented on a head-mounted display (NVIS nVisor SX HMD). Haptic feedback is provided by a low-cost 20”x30” foam board with two faces (figure 3). Redirected touching requires three things: the location of the user’s fingertip, a representation of the physical object’s geometry, and a technique for mapping virtual objects onto the physical object. The physical geometry is determined by having the user point with the tracked finger to each corner of the real object. The system then interpolates the points to generate vertices for the physical geometry. Note that this technique works for physical objects consisting of planar facets. After capturing the physical geometry, “correspondences between points on its surface and predetermined points on the virtual surface are passed to the space warping system.” [6]
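The corner-capture step can be sketched as below. The sketch assumes bilinear interpolation over a four-cornered planar facet; the source does not specify the interpolation scheme, and `interpolate_facet` and its subdivision parameter `n` are hypothetical.

```python
def interpolate_facet(corners, n):
    """Generate an (n+1) x (n+1) grid of vertices on a quadrilateral facet.

    corners: four (x, y, z) points in order around the facet,
             for example as captured by pointing at each corner.
    n: number of subdivisions per edge (hypothetical parameter).
    """
    c00, c10, c11, c01 = corners
    grid = []
    for i in range(n + 1):
        u = i / n
        row = []
        for j in range(n + 1):
            v = j / n
            # Bilinear blend of the four corners, one coordinate at a time.
            row.append(tuple(
                (1 - u) * (1 - v) * a + u * (1 - v) * b + u * v * c + (1 - u) * v * d
                for a, b, c, d in zip(c00, c10, c11, c01)
            ))
        grid.append(row)
    return grid

# One face of a 20" x 30" board, in inches, lying in the z = 0 plane.
face = [(0.0, 0.0, 0.0), (30.0, 0.0, 0.0), (30.0, 20.0, 0.0), (0.0, 20.0, 0.0)]
vertices = interpolate_facet(face, 4)
```

The resulting grid points are the kind of surface samples that could then be paired with points on the virtual surface.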

According to Kohli et al. (2012), to warp the virtual space “the surface of the real geometry must be mapped to the surface of the virtual geometry, while smoothly and minimally warping the rest of the space. Our system warps space using the well-known thin-plate spline technique commonly used in medical image analysis. A thin plate spline is a 2D interpolation method for passing a smooth and minimally bent surface through a set of points. The concept extends to higher dimensions; we use the 3D version.” [4]
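A 3D thin-plate spline warp of this kind can be sketched in plain Python. The sketch assumes the kernel U(r) = r that is commonly used for the 3D case and an exact fit at every control point; the cited papers do not give their exact formulation. The names `solve`, `fit_tps_3d` and `warp` are hypothetical, and the control points must not all lie in one plane.

```python
import math

def solve(a, b):
    """Solve a @ x = b by Gaussian elimination with partial pivoting.
    a is n x n, b is n x m (lists of lists); returns x as n x m."""
    n = len(a)
    m = [ra[:] + rb[:] for ra, rb in zip(a, b)]  # augmented copy
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            f = m[r][col] / m[col][col]
            for c in range(col, len(m[r])):
                m[r][c] -= f * m[col][c]
    x = [None] * n
    for r in range(n - 1, -1, -1):  # back substitution
        x[r] = [
            (m[r][n + k] - sum(m[r][c] * x[c][k] for c in range(r + 1, n))) / m[r][r]
            for k in range(len(b[0]))
        ]
    return x

def fit_tps_3d(src, dst):
    """Fit a 3D thin-plate spline that sends each src point to its dst point."""
    n = len(src)
    size = n + 4
    a = [[0.0] * size for _ in range(size)]
    b = [[0.0] * 3 for _ in range(size)]
    for i, p in enumerate(src):
        for j, q in enumerate(src):
            a[i][j] = math.dist(p, q)  # assumed 3D kernel U(r) = r
        affine = (1.0, *p)
        for k in range(4):
            a[i][n + k] = affine[k]  # affine part of the spline
            a[n + k][i] = affine[k]  # side conditions on the kernel weights
        b[i] = list(dst[i])
    return src, solve(a, b)

def warp(model, x):
    """Apply a fitted spline to a 3D point x."""
    src, coef = model
    basis = [math.dist(x, p) for p in src] + [1.0, *x]
    return tuple(sum(w * row[k] for w, row in zip(basis, coef)) for k in range(3))

# Hypothetical control points: cube corners stay fixed, the centre is lifted in z.
cube = [(x, y, z) for x in (0.0, 1.0) for y in (0.0, 1.0) for z in (0.0, 1.0)]
src = cube + [(0.5, 0.5, 0.5)]
dst = cube + [(0.5, 0.5, 0.7)]
model = fit_tps_3d(src, dst)
virtual_point = warp(model, (0.5, 0.5, 0.5))
```

In this sketch `src` plays the role of points on the real geometry and `dst` the corresponding points on the virtual geometry, so `warp` would take a tracked real position to the position at which the virtual hand is drawn. The fixed cube corners keep the warp close to the identity away from the displaced point.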

Research questions

The development of redirected touching has raised several questions. Is discrepancy detectable? What kinds and amounts of introduced discrepancy go unnoticed by users? Does discrepancy hurt task performance? Can users perform tasks with discrepant objects as well as they can with one-to-one objects? How does performance change as discrepancy increases? [1][2][5] These questions have been researched mainly by Luv Kohli. Some of the existing research indicates that, for certain tasks, performance is not affected by whether a virtual object is warped. Detection of discrepancy between what is seen and what is felt has also been evaluated, with results suggesting that a certain amount of discrepancy is undetectable by users [4]. In addition, tests of training and adaptation on a rapid aiming task in a real environment, an unwarped VE, and a warped VE led to the conclusion that training for the real task occurred in all conditions. Although training in the real condition was more effective, the results suggest that training with redirected touching transferred to the real world [2].

Applications

Redirected touching can be applied to the training of military aircraft pilots and maintenance crews, who need to learn cockpit procedures such as sequences of buttons and switches. The real aircraft and full simulators used to train these skills are costly and are not available in deployed settings. Redirected touching can therefore “enable a single quickly set-up physical mockup to represent many virtual cockpits, eliminating the need to change the mockup for each new aircraft.” [1][5]

References

- [1] Kohli, L. (2013). Warping Virtual Space for Low-Cost Haptic Feedback. I3D ’13 Proceedings of the ACM SIGGRAPH Symposium on Interactive 3D Graphics and Games, pp. 195-195

- [2] Kohli, L., Whitton, M. C. and Brooks Jr., F. P. (2013). Redirected Touching: Training and Adaptation in Warped Virtual Spaces. Proc IEEE Symp 3D User Interfaces, pp. 79-86

- [3] Kohli, L. (2009). Exploiting Perceptual Illusions to Enhance Passive Haptics. Proc. of IEEE VR Workshop on Perceptual Illusions in Virtual Environments (PIVE), pp. 22-24

- [4] Kohli, L., Whitton, M. C. and Brooks Jr., F. P. (2012). Redirected Touching: The Effect of Warping Space on Task Performance. Proc IEEE Symp 3D User Interfaces, pp. 105-112

- [5] (Citation text missing in the source.)

- [6] Kohli, L. (2010). Redirected Touching: Warping Space to Remap Passive Haptics. 3D User Interfaces (3DUI), 2010 IEEE Symposium, pp. 129-130